Transcript for the Ideas Grants peer reviewer question and answer webinar. Recorded on Tuesday, 22 July 2025 10:30 am - 12:30 pm (AEST).

Introduction and overview

Julie Glover 0:18

Good morning, everyone, and thank you all for making the time to attend the Ideas Grants Peer Review forum today. My name is Julie Glover and I'm part of the Executive team here at NHMRC. Before we begin, I'd like to acknowledge the traditional owners of the lands on which we're all meeting. I'm here on Ngunnawal country, but I know that we're on lands around the country, and I'd like to pay my respects to elders past, present and emerging, as well as Aboriginal and Torres Strait Islander people who are joining the meeting today. Thank you so much everyone for your time and commitment to the 2025 Ideas Grants peer review process. Your role as a peer reviewer or peer review mentor is critical to the success of the scheme. We really do appreciate your expertise and your dedication to this process. You will be supported throughout the process. We have a small but dedicated team here at NHMRC led by Dr Dev Sinha, who's here today along with Katie Hotchkis. They’re going to lead us through the proceedings, so I'd like to pass now on to Dev.

Dev Sinha 1:37

Thank you so much, Julie, and a very good morning to everyone here today. I'd also like to extend my thanks to every single one of you for taking the time to join this forum today, including our wonderful PRMs who are here today to participate in this very important session. We've had a really fantastic uptake for today's forum and I'm very excited to share with you what we've got planned for you today. I'd also like to acknowledge that this is an immense undertaking on your time and your expertise, and we always remain very grateful for your continued involvement with the Ideas Grants scheme and for also helping us deliver the scheme to the quality and efficiency that is expected out of NHMRC's peer review system. Today's forum is not just a briefing. We hope that you've taken the opportunity to familiarise yourself with the assessment criteria and score descriptors for the Ideas Grants scheme.

Our aim here is to make this more interactive. We're going to engage with you on a couple of focus areas, particularly on comment writing and how to review comments.

So with that in mind, I'll keep the briefing part of the webinar very short and focused. I will take a few moments to discuss some general aspects of the Ideas Grants peer review process, talk a bit about what's new this year, how to make the best use of the comment section, the budget comments and then explain the role of the peer review mentors during the assessment process and introduce our PRMs who are with us today. We then move on to an interactive module, which is a quiz using Menti. So for that quiz, please keep your smartphone handy as we will ask you to scan a QR code to access the quiz. We're going to show you some examples of anonymized comments and ask you whether you consider them appropriate or sufficient. Again, the idea is to generate some conversation on comment writing generally and hopefully provide you with some examples of what good comments look like and also what to avoid when you're writing them yourselves as you assess the applications. At the end of the interactive module, we'll have some time for a Q&A session, and thanks to those of you who sent in your questions as part of the registration process. This is also your opportunity to seek advice directly from the PRMs on any aspect of Ideas Grants.

Just some housekeeping before we start the session. The session is being recorded. We will make the recording available to everyone. However, the quiz and the Q&A session will be available to you later as an anonymized transcript rather than a recording, and this is so we can deidentify those who participate in the discussion or ask specific questions. So because of that, to allow the briefing part of the forum to be recorded without any interruption, we ask that you please hold all questions till we get to the more interactive sessions. Please also make sure that your full name is displayed. This helps us identify you for the purpose of paying sitting fees for attending today's session. And if you have any reservations at all about displaying your full name, please e-mail the Ideas Grants inbox (ideas.grants@nhmrc.gov.au) and note your attendance that way. The inbox is being monitored live, so please also reach out via the inbox if any other issues pop up during today's session. When we get to the interactive sessions, use the Q&A function if you can to put through your questions. Like we did last year, if we don't get through all the questions by the end of today's session, we will answer them in the transcript. Lastly, please keep yourselves on mute if you can, unless you're participating in the discussion or asking a question.

With all that out of the way, I'll start off with some feedback from the 2024 Ideas Grants Peer Review Survey and all the different things we've tried our best to action this year. So firstly, as you might have already noticed if you peer reviewed for us last year, due to the really positive feedback we received on the interactive nature of last year's forum, this year our briefing is short with longer Q&A and focused on interactive modules and comment writing, which has actually been identified as a training focus area in the survey for a few years now.

We continue to try very hard each year to reduce your workload, so for comparison the maximum workload for an Ideas Grants reviewer was 30 applications in 2020, 25 applications in 2021 and 2022, and this decreased to 15 and 18 in 2023 and 2024. So this year also, peer reviewers will be asked to review no more than 16 applications. Again, based on your feedback this year, we've managed to keep the peer review period outside of the school holiday period for most states and territories. I will note that although we do try to do our best with this, sometimes there are unavoidable conflicts, and it means that we can't always avoid this overlap. However, it always remains a very important consideration in our planning and scheduling discussions.

Before we go on to the details of the scheme, you are reminded of the importance of confidentiality and privacy regarding aspects of the peer review process. All information contained in applications that you're privy to is regarded as confidential unless otherwise indicated. This is enforced by the NHMRC through the deed of confidentiality that you sign when you accept the invite. A reminder again that that deed of confidentiality is a lifetime commitment. We also ask you to please familiarise yourself with NHMRC's position on the use of generative AI tools for peer review, and this includes ChatGPT, Copilot, Gemini, or any other such tools that you may have access to. Peer reviewers must not input any part of a grant application or any information that they obtain from a grant application into a generative AI tool, as this would be a breach of confidentiality. However, as we all know, the technology and risks in this space are constantly evolving at a really fast pace. So we will continue to monitor the rapid pace of development in generative AI and we'll continue to review and update our policy.

Ideas Grants 2025 peer review process overview

A very quick overview of the round this year. Applications to the round closed on 7th May and we had 2,380 applications received on that date. The COI process is now complete. Thank you very much to each and every one of you for getting through that stage so promptly. You would have received your allocated applications for assessment through Sapphire yesterday. Each application is being assigned to five reviewers for assessment wherever possible. A big thank you to those of you who've already started reviewing. It was fantastic to see this morning that people were already in there.

As I mentioned before, we've aimed to allocate no more than 16 applications for review to each peer reviewer. However, with late COIs and withdrawals, we may need to ask you to assess one or two more at a later stage. I'm not going to go through each step of the timeline here, but as you can see from this very general overview, it is really important to complete your assessments within the given time frame so that the outcomes can be delivered to applicants in a timely manner before the end of the year.

So what else is new in 2025? We've listened to feedback from Research Officers wanting more visibility for researchers involved in peer review. We're now sharing peer reviewer details with RAOs at the start and end of the peer review process. The aim in doing this is just to help RAOs better manage workload and other responsibilities for their researchers. This year again, we're giving you the specific dates on which we will need your involvement so you can plan your calendar around those dates. The dates for upcoming activities are on screen there. The caveat with these dates obviously is that they depend on the preceding activity being completed on time, and any extension or delay of one process then has a cascading effect on everything downstream from there.

Continuing the theme of what's new this year, in response to feedback received from a number of peer reviewers, we've clarified in the application process that Ideas Grants proposals should not have a clinical trial or a cohort study as their primary objective. They're better suited to the CTCS scheme instead. We've also included a point about alignment with scheme objectives and outcomes as part of your assessment of the Research Quality criterion (criterion one), just so you can take this factor into account. I will note, however, that this is not a new policy in itself; this has always been an Ideas Grants policy. It's just a strengthening and clarification of that existing policy based on the feedback we've received from you in previous years in the comment sharing phase, and we'll get to comment sharing a bit more later. Please note that this year you will be asked by your Secretariat if you've participated in the process and we strongly encourage that you please do participate.

Global Health Research

A very quick clarification on yet another issue that's again not a change in policy, but something that we've been asked about quite frequently in recent times, more so now that this is topical in the international context, is the assessment of research proposed to be conducted overseas. Please note that Ideas Grants can be funded for research conducted overseas. What you as peer reviewers should note is that the grant activity proposed to be conducted overseas must be critical to the project, and also that the CIA themselves must be based in Australia for at least 80% of the grant duration. Funding for overseas research support staff can also be considered as long as all those budgets are aligned with NHMRC's direct research cost guidelines.

Assessment criteria

I'm going to speed through these next few slides as this will be material that's already been shared with you in your assessment packs. So first, a quick look at the assessment criteria for Ideas Grants. We will be getting into each of these in a bit more detail when we discuss comments on each criterion via the Menti quiz.

A bit on comments, which is one of the prime areas of focus for this session today. As peer reviewers, you need to give applicants constructive feedback based on the assessment criteria and score descriptors. And like I said, we'll be looking at some anonymised examples very soon. Your comments reflect your expert assessment and not the NHMRC's views. And since your comments go directly to the applicant, make sure they're constructive, highlighting both strengths and weaknesses. If you spot a flaw, explain its implication and try and suggest improvements. Read the whole application before you score individual criteria, but scoring itself should be holistic. Don't penalise for something that's not mentioned in a specific section if that's been covered elsewhere. However, when you're writing your comments for a specific criterion, make sure your comments themselves are relevant to the criterion that you're assessing.

Comment sharing

A little bit on comment sharing. Assessors view the comments provided by others who reviewed the same application. This process allows you to evaluate how your comments compare with those of the other assessors who assessed the same application. It also holds you accountable to your peers for the comments you provide. This may help you benchmark and increase the quality of your own comments in future rounds as well. This is also an opportunity for you to notify NHMRC if you notice an error or something inappropriate in the comments. So please let us know and we can follow up with the reviewer who provided those comments. It's always important to remember applicants receive your comments as written, so please think about the kind of comments you would like to receive if you were an applicant. As I mentioned before, this year your Secretariat will contact you to confirm if you've reviewed comments.

Some of you will probably know this as a recommendation from the Peer Review Analysis Committee, or PRAC, which was convened by NHMRC a few years ago. For the past few years, we have conducted an outlier screening check to identify scores that differ significantly from those of other peer reviewers. We do this by using statistical methods that were recommended by PRAC. Where outlier scores are identified, we will seek clarification from peer reviewers if needed. However, please do note that there often may be valid, acceptable reasons for an outlier score. So for example, it may reflect your specific expertise or judgment, and therefore it's not necessarily incorrect at all.

This is just an extra quality assurance process to ensure that the outlier score is not a result of an unintentional error or a typographical mistake. And for those of you who prefer statistics as some light reading, please have a look at the PRAC report on our website through the QR code on the slide.

Budget reviews

A very quick look at budget reviews. When reviewing the application budget, you may notice that a particular budget item like a PSP or a research cost is not sufficiently justified by the applicant or may not be in line with the proposed research objectives. So things that you should consider here are whether the budget requests align with NHMRC DRCs, whether the requested items are necessary, whether they have been justified, and whether they represent value for money. I've got an example here of a clear, specific, actionable budget recommendation and an unclear version of the same recommendation that makes it very difficult for us to action. I will give you a couple of seconds to read them on screen while I note that budget comments are reviewed by NHMRC research scientists before any reductions are made. So a clear recommendation from you makes our jobs a whole lot easier.

Peer review mentors (PRMs)

I'd like to introduce the peer review mentor process for this year. The PRMs' primary role is to provide advice and mentoring during the assessment phase of peer review. They are available to provide advice to you on broad questions and effective peer review methods and practices. They can advise you by sharing their own experience, and share their tips and tricks with you wherever relevant as well. However, do note that PRMs themselves do not assess applications and cannot provide advice on the scientific merits of individual applications that are with you for review.

When you raise your questions today, if you're talking about specific applications, please make sure they're anonymised and focus on the broader advice instead. Again, if you run into issues that you want to seek advice on during the peer review process, please contact your Secretariat in the first instance and they will get in touch with the peer review mentor on your behalf.

Finally, keep your Secretariat informed if issues arise, if you require clarification or assistance, or if you wish to identify any eligibility issues, integrity issues, all that sort of thing. We're here to help you. You can contact your Secretariat to seek advice from PRMs where required. The PRMs will be hosting two drop-in sessions for you on Wednesday 30 July and Tuesday 5 August. We will be sending you more comms about these sessions shortly after this session today.

So with that, I'm very pleased to introduce our 2025 PRMs who have been patiently waiting while I've been talking so far. Please welcome Doctor Anna Trigos, Professor Stuart Berzins and Doctor James Breen. Doctor Nicole Lawrence is also a PRM for us this year; however, unfortunately she couldn't be with us today due to some other commitments. I would now like to invite our PRMs to introduce themselves very briefly along with any general tips and ideas you may have, then we can move on to the next module. Stuart, since this is not your first time, I might throw to you first to introduce yourself.

Federation University

Stuart Berzins 18:27

Thank you, Dev, and welcome everyone to the session. So just to introduce myself, I'm an immunology researcher and a group leader. I'd like to think I've sort of got a range of experiences that allow me to sort of put myself in other people's shoes for helping you out. I've worked overseas as a researcher and in metropolitan and regional areas in Australia. As a reviewer, I've been involved with NHMRC reviews since, well, the early 2000s, most recently as a peer review mentor. I guess I've been doing it for quite a long time, so I'm happy to help wherever I can. I guess my main advice would be try and make sure that you're aligning your views with the recommendations, so with the assessment criteria or score descriptors. Where the review system can sort of fall into trouble, I guess, is if everyone kind of thinks they would do things differently or this is my opinion and things like that. The last thing we need is 200 different sets of opinions that are all slightly different because it makes it unfair for the applicant. So try and work from the perspective of what are the score descriptors telling you about this? How do you assess it in terms of the assessment criteria that you've been given?

The Kids Research Institute Australia

Jimmy Breen 21:27

My name's Jimmy Breen. I'm chief data scientist of an Indigenous genomics group here on Kaurna land, which is Black Ochre Data Labs. I'm affiliated with the Kids Research Institute, which is in Perth, but I live in Adelaide, but I'm moving to Perth and my academic affiliation is to ANU in Canberra. I am much like Stuart; I've got a lot of experience in doing lots of different reviewing both with ARC and NHMRC. But also more recently, I've done a lot of international reviewing for Wellcome Trust, NIH, and I'm also on a grant in Canada for the New Frontiers in Research Fund as well. So you know, I have a lot of experience looking at assessments of different things and also trying to address them in my own work as well.

I do lots of multi genomics of complex disease work for Indigenous people, and given my experience in the Indigenous genomics field, although I'm non-Indigenous, I have done quite a bit of work in assessing Indigenous Research statements, which are really key, important topics for the research that we do here in our work, but also across Australia. So I can always answer questions on that. My only advice as well, just going on what Stuart says: I think you'll be surprised how similar you are in a lot of your comments a lot of the time, and so you can kind of trust your gut in terms of assessing against the criteria. If you've read them clearly and properly and you've read the application clearly, you should be similar to the other people who are assessing as well.

Peter MacCallum Cancer Centre

Anna Trigos 22:20

Welcome everyone. I'm Anna Trigos and I'm a group leader at the Peter MacCallum Cancer Centre in Melbourne. I have grant review experience with NHMRC and other funding schemes as well, although potentially not as much as Jimmy and the others. So I'm very happy to be part of this forum. My work is mainly computational biology, although I work very much multidisciplinarily with lab scientists as well as clinician scientists and clinician researchers. So, when it comes to multidisciplinary research, that is kind of where my headspace is often at. In terms of advice, I guess it is to have a little bit of fun reviewing the grants. You get the opportunity to see some very cool science, very cool ideas. I always try to be a little bit glass half full with my reviews. I mean, at the other end of that application are people who put their heart and soul into it, and your comments are going to decide really in a way what diseases in the future will have new treatments, and which ones will not. So, I think it's very, very important to keep very much an open mind and be a little bit positive about things and enjoy the process.

Dev Sinha 24:00

On that very positive note, that was just amazing. Thanks, Anna. I'll just pass on to Katie for the Menti quiz. Take it on from here, Katie.

End of recording.

[The remainder of the live modules are available as a deidentified Q&A rather than a video recording to preserve the anonymity of the peer reviewers participating in them]

Interactive Quiz held via Menti – Peer Review Comments

The following quiz was held via Menti.

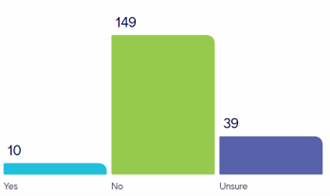

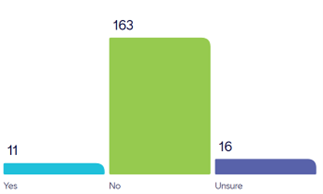

The purpose of the interactive quiz was to show a range of assessor comments that are similar to real comments we have received in the past. They have been edited and anonymised. Attendees were asked to read each comment and consider whether they thought it was appropriate or sufficient against the Ideas Grants score descriptors. Participants were given the option to vote Yes if they believed the comment was sufficient or appropriate, No, or Unsure.

Question 1

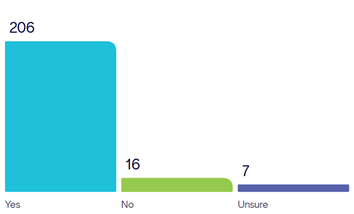

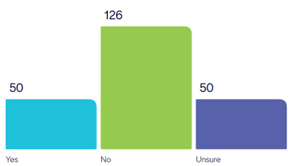

Do you think this assessor comment is appropriate and/or sufficient? (Research Quality)

Strengths. Clearly written with sound rationale underpinning the aims. The experiments are well powered. The techniques employed are state-of-the-art, leveraging advanced and reliable genetic methodologies to alter gene and protein expression in-vivo. The research area aligns well with the team's expertise and previous work, making the proposal internationally competitive. Weaknesses. The goals and scope of work needed in the studies in Aim X was not well defined, it was not clear how the localisation / studies would inform on the mechanism at the level of detail provided. It also wasn’t clear how many types of studies or, how to rationally prioritise them.

This comment highlights the strengths and the weaknesses of the application against the Research Quality criterion, which requires a well-defined and coherent research plan and clarity in the study design and approach.

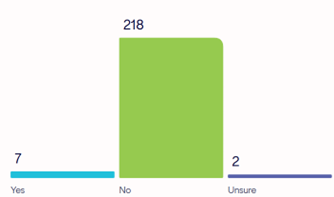

Question 2

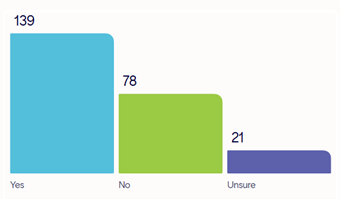

Do you think this assessor comment is appropriate and/or sufficient? (Research Quality)

The project aims and proposed research plan are supported by an extremely well justified hypothesis, are well-defined, extremely coherent, and have a near flawless study design which would be extremely competitive with similar research proposals internationally. Scientific and technical risks have been well identified with appropriate mitigation strategies.

The comment is a copy of the score descriptor and therefore doesn't actually provide any application-specific feedback to the applicant in terms of the strengths and weaknesses of that application, or demonstrate that the reviewer read the application in great detail.

When providing comments, consider the recipient's perspective. The assessment criteria are general, but the application is specific. Offer specific guidance on what was done well and what could be improved. Early career researchers need specific feedback on their applications to improve and reapply in the future.

Explain why a particular score was given, detailing why an application received a certain score and what could have been done to achieve a higher score. Provide both positive and negative feedback. Highlighting even minor weaknesses can be valuable for the applicant's future applications. The main value of comments comes from explaining what the applicant could have done differently or what was particularly good about their application. This helps the applicant understand their strengths and areas for improvement.

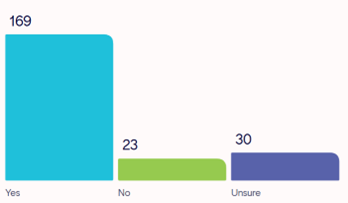

Question 3

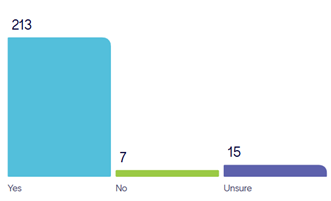

Do you think this assessor comment is appropriate and/or sufficient? (Innovation and Creativity)

I found some of the stated innovative aspects of the proposal to be over-stated or lack clarity; e.g. identify possible interventions far beyond [technique]. It isn’t clear how the application will achieve this. Interventional studies in [model] could be something that would strengthen this idea. The overall concept is sound but much is already known on this topic, therefore in my view, the innovation is moderate. The techniques and technologies being used are standard and don’t represent a high level of innovation.

The NHMRC defines innovation and creativity for the Ideas Grants scheme as health and medical research that seeks to challenge and shift current paradigms or have a major impact on a health research area through one or more studies. This comment effectively summarises the assessment against the criterion, stays focused on that criterion, and provides suggestions on how to strengthen the application against it.

There are many positives about this comment, but there are a couple of areas that could be improved. Generally speaking, it's important to avoid making the feedback sound like a personal opinion. As an assessor, the role is to relay to the applicant whether the application aligns with the score descriptors and the assessment criteria. Therefore, it’s important to present as an independent adjudicator, not as someone offering a personal opinion. Depersonalise the feedback a bit and make it clearer where the alignment with the assessment criteria is. This might involve tweaking the language slightly. Overall, the message is good, but there are a few areas where improvements could be made.

When providing feedback, focus on the content presented in the application itself. It's appropriate to suggest improvements related to what has been presented but avoid rewriting the application or imposing personal opinions. Instead, assess the application based on its own merits and provide constructive suggestions within that context.

Question 4

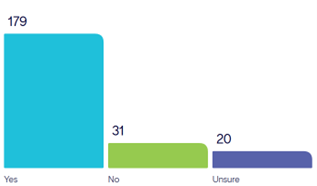

Do you think this assessor comment is appropriate and/or sufficient? (Significance)

This project is highly significant and promises to lead to strong publications by improving [disease] prognosis. Current [techniques] are labor-intensive and costly. The novel [platform] proposed may revolutionize [technique] by enabling [mechanism]. This approach holds great potential for clinical evaluation of [conditions] and could attract substantial funding and collaborations. The project aims to significantly improve [technique] sensitivity and reliability, providing more accurate [condition] prognosis and optimized therapeutic regimens for [disease] patients that is very highly important

NHMRC defines significance for the Ideas Grants scheme as the extent to which the outcomes and outputs will result in advancements to or impact on the research or health area. This comment uses some of the words from the score descriptors for consistency, but it also summarises the strengths and weaknesses of the application against the score descriptor. If there are weaknesses in the grant application, it's also important to provide feedback that could help the applicant address those weaknesses. When assessing significance, it's important to bear in mind that how prevalent or rare a disease is should not have a bearing on how impactful the research may be.

The feedback suggests that the language used in the application aligns with a score of around 5, indicating that the project is highly significant but not at the highest level of positiveness. While the review highlights the positives, it lacks details on the weaknesses that prevented the application from scoring higher. Including comments on these weaknesses would have made the feedback more sufficient.

Question 5

Do you think this assessor comment is appropriate and/or sufficient? (Significance)

The proposal does not connect the significance of the proposed research to the categories outlined in the Idea Grant guidelines. Here are some suggestions to strengthen and explain these connections:

- How will the outcome lead to personalised [treatment]? The proposal should offer evidence and examples.

- Describe how the proposed research will advance the development of treatments, highlighting any preliminary data or related studies that support this claim. This will strengthen the connection between the proposed research outcome and the claimed significance.

- Explain how each significant aspect listed will directly benefit from the research findings.

Provide clear, evidence-based justifications to illustrate the potential impact of the research.

Providing examples of strengths and weaknesses, rather than just relaying assessment criteria, would be more helpful. The feedback lacked specific examples, making it less constructive. The feedback should highlight both positives and negatives, and the tone of the feedback could be more collegial while still being direct and helpful. Additionally, the feedback should be specific to the grant being assessed, rather than broad suggestions. It's also noted that preliminary data is not a requirement for the Ideas Grants scheme, and this should be clarified to avoid confusion.

Question 6

Do you think this assessor comment is appropriate and/or sufficient? (Significance)

I cannot see how this research will result in a therapeutic product that is better than existing treatments.

The feedback is considered lacking in detail and too brief. It is also seen as somewhat personal. To be more useful, the feedback should reference the content of the application, discussing its limitations or positives. The current comment provides very little useful information to the applicant.

Question 7

Do you think this assessor comment is appropriate and/or sufficient? (Capability)

The CIA is a promising researcher with high quality outputs achieved as lead author and relative to opportunity. The CIB and AIs provide all the expertise required for success. The CIA has highly relevant expertise to address the aims of the proposal. While the team has unique resources and facilities to undertake the experiments, they would benefit from dedicated FTE for [technique].

The capability assessment should focus on the ability of the applicants to perform the proposed work and not traditional track record measures. What matters is whether they have the skills and expertise to carry out the specific methods and the techniques required for that project. So it's reasonable to use publications and collaborations as supporting evidence for capability or experience in the relevant area. But they're not sufficient or decisive, and it's crucial to avoid being biased by the impact factor or prestige of the journals.

[Additional commentary by NHMRC: NHMRC also recommends the San Francisco Declaration on Research Assessment (DORA) guidance on Rethinking Research Assessment.]

Question 8

Do you think this assessor comment is appropriate and/or sufficient? (Capability)

While the CIA has some capacity to lead the team and the CIB and AI bring some expertise required, the team lacks expertise in [condition1], which is very different to [condition2] and other [disease]. This is a major weakness of the team. The proposal does not address the facilities available to the CI team.

What was good about this comment was that it just pointed out that the proposal didn't address the facilities. So that was something that concerned the assessor. Capturing capability will be covered in the FAQs.

Question 9

Do you think this assessor comment is appropriate and/or sufficient? (Significance)

In comparison to the broader research field, the proposed research objectives align with existing approaches and are not expected to significantly alter the current paradigm or produce new breakthroughs in [disease].

The comment, while addressing the reviewer's overall concerns about the significance of the research, could have been more helpful if it had included some application-specific information. Was there a specific technique that's already well known or addressed? What are the specific weaknesses of this application?

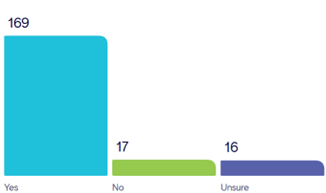

Question 10

Do you think this assessor comment is appropriate and/or sufficient? (Capability)

The CI team regularly publish in very high-quality journals (Nat Med, NEJM, Nat Comm, Cell, etc.) and project builds on these studies and uses approached that the team have very high expertise in. This clearly put the capability in the exceptional category.

We have talked about this earlier in our discussions, but the comment specifically references the quality of journals as a proxy for capability to undertake the project.

It's important to avoid being biased by the impact factor or prestige of the journal.

End of quiz.

The remaining portion of the Ideas Grants Forum was the Q&A session. The questions asked during the quiz and in the Q&A session are published separately.